Adversarial Robustness in Two-Stage Learning-to-Defer: Algorithms and Guarantees

Y. Montreuil, A. Carlier, L.X. Ng, W.T. Ooi.

(Accepted) International Conference on Machine Learning, 2025

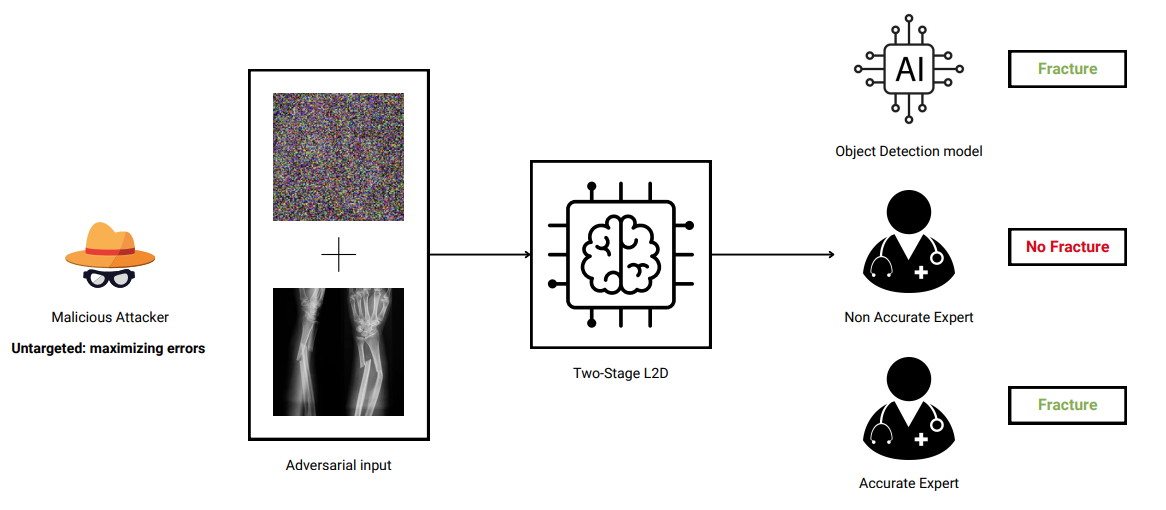

An attack that can occur in the Learning-to-Defer framework: a malicious attacker perturbs the input to increase the probability that the query is assigned to a less accurate expert, with the aim of maximizing classification errors.
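The threat model in the caption can be illustrated with a minimal sketch. It assumes a hypothetical linear rejector that gives each expert a score and routes the query to the highest-scoring expert. The attacker takes a single FGSM-style signed-gradient step of L-infinity size `eps` to favor a chosen (less accurate) target expert. The names `route` and `targeted_perturbation` are illustrative only, and this is not the attack formulation or defense studied in the paper.

```python
from typing import List, Sequence


def route(weights: Sequence[Sequence[float]], biases: Sequence[float], x: Sequence[float]) -> int:
    """Return the index of the expert with the highest linear rejector score (hypothetical rejector)."""
    scores = [sum(w_i * x_i for w_i, x_i in zip(w, x)) + b for w, b in zip(weights, biases)]
    # Ties are broken in favor of the lowest expert index.
    return max(range(len(scores)), key=scores.__getitem__)


def sign(v: float) -> int:
    """Return -1, 0 or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def targeted_perturbation(
    weights: Sequence[Sequence[float]],
    biases: Sequence[float],
    x: Sequence[float],
    target: int,
    eps: float,
) -> List[float]:
    """One signed-gradient step of L-infinity size eps that increases the score margin
    between the target expert and the currently assigned expert (illustrative sketch)."""
    current = route(weights, biases, x)
    if current == target:
        # The query already goes to the target expert; leave it unchanged.
        return list(x)
    # For a linear rejector, d(r_target - r_current)/dx = w_target - w_current.
    grad = [wt - wc for wt, wc in zip(weights[target], weights[current])]
    return [x_i + eps * sign(g) for x_i, g in zip(x, grad)]
```

For example, with two experts scored by `weights = [[1.0, 0.0], [0.0, 1.0]]` and zero biases, the input `[0.6, 0.4]` is routed to expert 0. A perturbation with `eps = 0.15` toward expert 1 moves it to roughly `[0.45, 0.55]`, which is then routed to expert 1. For a linear rejector this step raises the target-versus-current margin by exactly `eps` times the L1 norm of `w_target - w_current`, which is why a small budget can be enough to flip the deferral decision.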