Unsupervised Human Pose Estimation on Depth Images

T. Blanc-Beyne, A. Carlier, S. Mouysset, V. Charvillat.

European Conference on Machine Learning and Principles and Practice of Knowledge Discovery in Databases, 2020

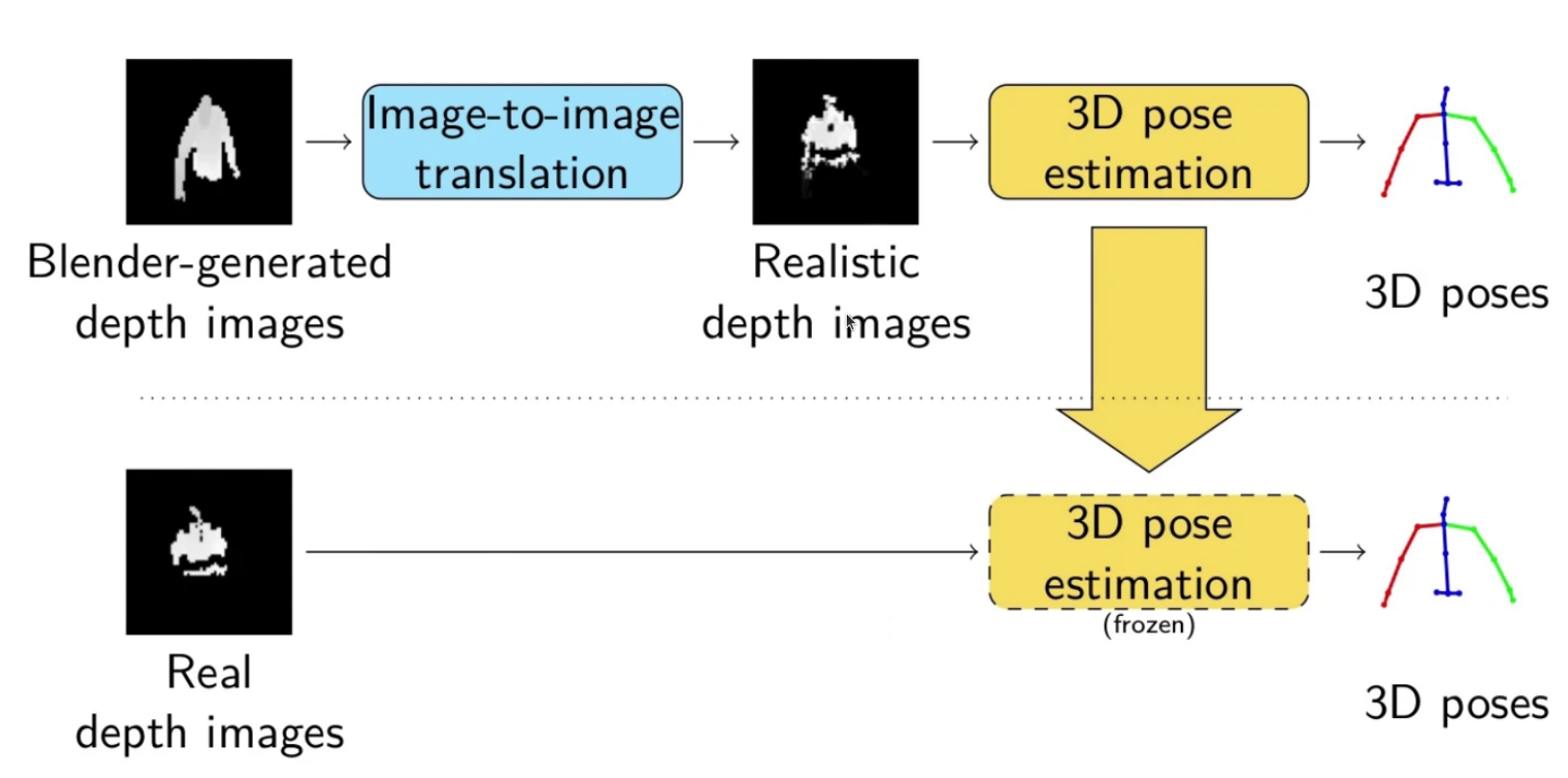

Illustration of our human pose estimation pipeline. We generate synthetic depth images in Blender using an animated 3D human model. We then train a domain adaptation model to translate the synthetic images into realistic depth images, which we use to train a human pose estimation model.
